Introduction: Why Docker?

Imagine this: you’ve spent days working on a project, wrestling with dependencies, and tweaking configurations. You finally get everything running perfectly on your machine, only to face a nightmare when deploying to a different environment. “Why doesn’t it work on the server?” you ask, pulling your hair out. Sound familiar?

Many developers have faced this frustration. You painstakingly create an environment on your local machine that seems perfect, but when it’s time to deploy, you encounter issues because of differences in environments, missing dependencies, or conflicting software versions.

Here’s where Docker steps in like a superhero for your development process. Docker tackles the “it works on my machine” problem by letting you package your application, together with its dependencies and configuration, into a single portable container image. The same image then runs the same way on your local machine, on a testing server, or in production.

What is Docker?

Docker is a platform built around the free, open-source Docker Engine, first shown to the public in March 2013. It allows developers to package applications and their dependencies into isolated environments called containers. Think of Docker as a magical box that contains everything your application needs to run, making deployment as seamless as packing your bags for a trip: you just pick up and go!

Here’s a quick rundown of what Docker does:

- Isolates your application: Containers package your app with its dependencies, so it runs consistently across different environments.

- Saves time: No more setting up complex environments from scratch. Just deploy the container, and you’re set.

- Simplifies collaboration: Share your container with others, and they get the exact same environment you developed in.

Docker vs. Virtual Machines: The Not-So-Evil Twin

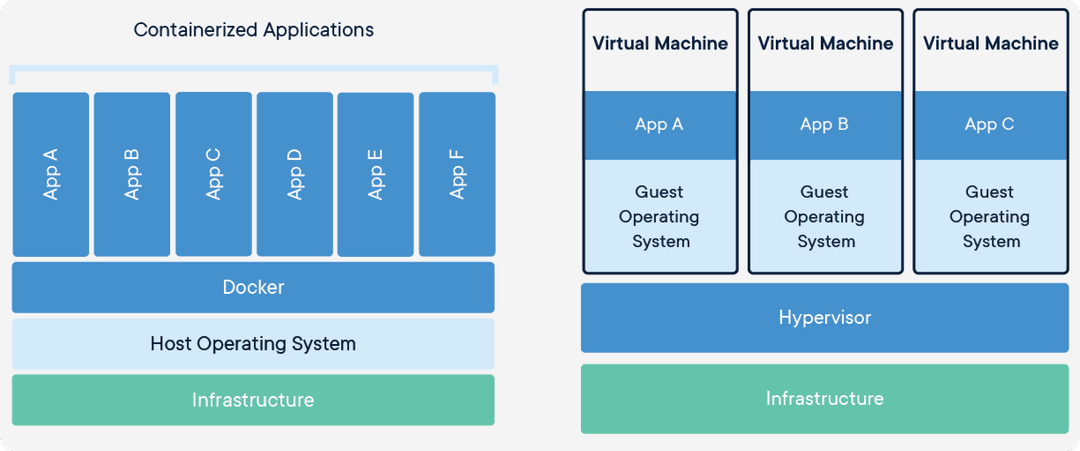

Let’s clear up the confusion between Docker and virtual machines (VMs). Although they both create isolated environments, they do so differently:

- Virtual Machines (VMs): Think of VMs as complete, self-contained virtual computers. Each VM runs its own full operating system, including a separate kernel, which can be resource-heavy and slow to boot. They offer strong isolation but come with considerable overhead in terms of resources.

- Docker Containers: Containers share the host operating system’s kernel but run isolated processes. This means they’re much lighter and faster, like having multiple applications running in their own private workspaces without needing a whole new computer for each. Containers use fewer resources and start up almost instantly.

(Image courtesy of docker.com)

In summary:

- VMs = Heavyweight, full OS, slower boot times, higher resource usage.

- Docker Containers = Lightweight, shared OS kernel, quick startup, lower resource consumption.

Why Use Docker as a Developer?

Docker is like a Swiss Army knife for developers. Here’s why you’ll love it:

- Speed: Containers start up in seconds. No more waiting for your environment to boot up.

- Portability: Run the same image on Windows, Mac, or Linux. On Windows and Mac, Docker Desktop runs Linux containers inside a lightweight Linux VM, so your application still sees a Linux kernel.

- Isolation: Keep your development environment clean. Containers are self-contained and don’t affect each other.

- Consistency: Ensure your application runs the same everywhere. No more “but it works on my machine” excuses.

- Efficiency: Containers are more resource-efficient than VMs, so you can run many of them on a single host with little overhead.

Ready, Set, Docker! Let’s Create Your First Application

Ready to dive in? Let’s create a simple Node.js application and run it in Docker. This hands-on approach will help you see Docker in action.

Step 1: Install Docker

First, let’s get Docker installed on your machine. Here’s how you can do it:

For Ubuntu:

- Uninstall old versions:

      sudo apt-get remove docker docker-engine docker.io containerd runc

- Update and install dependencies:

      sudo apt-get update
      sudo apt-get install \
        apt-transport-https \
        ca-certificates \
        curl \
        gnupg-agent \
        software-properties-common

- Add Docker’s official GPG key:

      curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

- Set up the stable repository:

      sudo add-apt-repository \
        "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
        $(lsb_release -cs) \
        stable"

- Install Docker Engine:

      sudo apt-get update
      sudo apt-get install docker-ce docker-ce-cli containerd.io

- Verify installation:

      sudo docker run hello-world

- Enable Docker to start on boot:

      sudo systemctl enable docker

- Manage Docker as a non-root user (log out and back in afterwards so the new group membership takes effect):

      sudo usermod -aG docker $USER

Note that apt-key is deprecated on recent Ubuntu releases, and [arch=amd64] assumes a 64-bit x86 machine. If either step fails on your system, follow the current instructions in Docker’s official Ubuntu installation guide.

For macOS and Windows: Follow the official installation guides on Docker’s website.

Step 2: Create Your Project

Create a new folder for your project and add two files:

- app.js (your Node.js application code)

- Dockerfile (instructions to build your Docker image)

Your folder should look like this:

    .
    ├── Dockerfile
    └── app.js

Step 3: Write Your JavaScript File

Add this simple code to app.js:

    #!/usr/bin/env node

    console.log("Docker is magic!");

This script prints "Docker is magic!" to your terminal, which will confirm that your container ran.

Step 4: Write Your Dockerfile

The Dockerfile is like a recipe for building your container. Here’s what it looks like:

    # Use an official Node.js runtime as a parent image
    FROM node:latest

    # Set the working directory
    WORKDIR /usr/src/app

    # Copy the application code
    COPY app.js .

    # Define the command to run the application
    CMD [ "node", "app.js" ]

Step 5: Build Your Docker Image

Build your Docker image with:

    $ docker build -t node-test .

Here, -t tags your image with a name (node-test), and the trailing . tells Docker to use the current directory as the build context.

Step 6: Run Your Docker Image

Run your container with:

    $ docker run node-test

You should see "Docker is magic!" in your terminal. Congratulations, you’ve just run your first Docker application!

Under the Hood: How Docker Works

Curious about what’s happening behind the scenes? Here’s a peek:

Docker is a platform designed to make it easier to create, deploy, and run applications by using containers. Containers allow a developer to package up an application with all the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

Key Components:

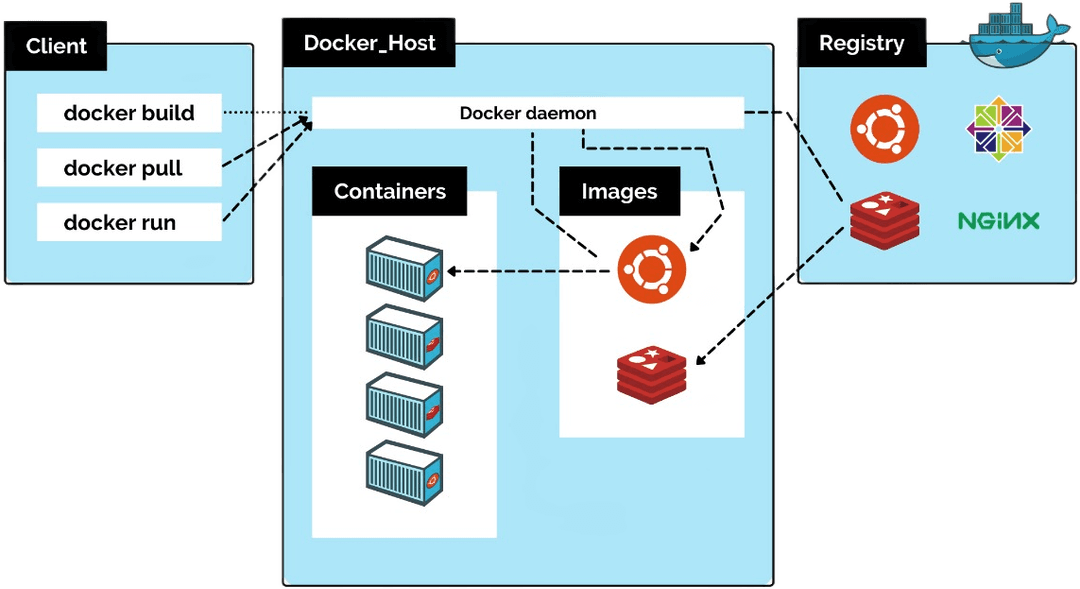

- Docker Daemon (dockerd): The Docker daemon is a background service responsible for managing Docker containers, images, networks, and storage volumes. It listens for Docker API requests and processes them.

- Docker Client (docker): The Docker client is a command-line tool that allows users to interact with the Docker daemon. Commands like docker run, docker build, and docker pull are issued from the Docker client to the Docker daemon.

- Docker Images: Docker images are read-only templates used to create containers. An image contains the application code, runtime, libraries, and dependencies needed to run the application.

- Docker Containers: A container is a runnable instance of an image. It is a lightweight, isolated process (or group of processes) that gets its own filesystem, built from the image, plus its own settings and network.

- Docker Registries: A Docker registry is a storage and content delivery system that holds named Docker images. Public registries like Docker Hub allow users to store and share images, while private registries are used for internal purposes.
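To make the client/daemon split concrete, here is a minimal Node.js sketch of what a client does under the hood: it sends an HTTP request to the daemon's API. It assumes the daemon listens on the default Unix socket /var/run/docker.sock (typical on Linux) and that your user is allowed to access it. The names dockerRequestOptions and dockerGet are made up for this example and are not part of Docker.

```javascript
// Sketch only: talk to the Docker daemon the way a client would.
// Assumption: the daemon listens on the default Unix socket below.
const http = require("http");

const DOCKER_SOCKET = "/var/run/docker.sock";

// Build the options for a GET request to the Docker Engine API.
// (Hypothetical helper, named for this example.)
function dockerRequestOptions(path) {
  return { socketPath: DOCKER_SOCKET, path, method: "GET" };
}

// Send the request and resolve with the parsed JSON response.
// (Hypothetical helper, named for this example.)
function dockerGet(path) {
  return new Promise((resolve, reject) => {
    const req = http.request(dockerRequestOptions(path), (res) => {
      let body = "";
      res.setEncoding("utf8");
      res.on("data", (chunk) => (body += chunk));
      res.on("end", () => {
        try {
          resolve(JSON.parse(body));
        } catch (err) {
          reject(err);
        }
      });
    });
    // Fails if the daemon is not running or the socket is not accessible.
    req.on("error", reject);
    req.end();
  });
}

// Usage (needs a running daemon), roughly what `docker version` asks for:
// dockerGet("/version").then((info) => console.log(info.Version));
```

The usage line is left commented out so the file loads even on a machine without Docker; uncomment it to try the call against your own daemon.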

How Docker Works:

- Build: Docker uses a file called Dockerfile to build images. A Dockerfile contains a series of instructions on how to build the Docker image. When you run docker build, Docker reads the Dockerfile and executes the instructions to create an image.

- Ship: Once the image is built, it can be shared via a Docker registry. Running docker push uploads the image to a registry, making it available for others to pull and use.

- Run: To run a Docker container, you use the docker run command followed by the image name. This command creates a new container from the specified image and starts it.

- Isolate: Docker uses namespaces to provide isolation. Each container runs in its own isolated environment, ensuring that processes in one container do not interfere with those in another.

- Manage: Docker provides tools to manage the lifecycle of containers. Commands like docker start, docker stop, and docker rm are used to manage the state of containers.

(Image courtesy of google.com)
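The Run and Manage steps amount to moving a container through a handful of states. The sketch below models that as a tiny state machine in JavaScript. It is a deliberate simplification: the real daemon tracks more states (paused and restarting, for example), and the real CLI treats some repeated commands as harmless no-ops, which this model simply rejects. TRANSITIONS and applyCommand are names invented for the example.

```javascript
// Simplified container lifecycle. `docker run` is shorthand for
// `docker create` followed by `docker start`.
const TRANSITIONS = {
  created: { start: "running", rm: "removed" },
  running: { stop: "exited" }, // a running container must be stopped before rm
  exited: { start: "running", rm: "removed" },
};

// Return the state a container ends up in after a command,
// or throw if this simplified model does not allow the command.
function applyCommand(state, command) {
  const next = (TRANSITIONS[state] || {})[command];
  if (!next) {
    throw new Error(`cannot '${command}' a container that is ${state}`);
  }
  return next;
}

// Walk one container through start -> stop -> rm.
let state = "created";
for (const command of ["start", "stop", "rm"]) {
  state = applyCommand(state, command);
  console.log(`docker ${command} -> ${state}`);
}
```

Running it prints one line per command and ends in the removed state, while applyCommand("running", "rm") throws, mirroring the fact that Docker refuses to remove a running container unless you force it.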

Dive into Key Technologies:

- Namespaces: Docker uses Linux namespaces to isolate running processes. Each container gets its own namespaces for things like process IDs (PID), networking, and filesystem mounts, so it sees only its own processes, network interfaces, and files.

- Control Groups (cgroups): Cgroups limit and monitor the resource usage of containers. They ensure that containers do not exceed their allocated resources like CPU, memory, and I/O.

- Union File Systems: Docker builds images on a union filesystem (on current Linux installs, typically OverlayFS). A union filesystem stacks multiple read-only layers into a single view and gives each container a thin writable layer on top. Because the read-only layers can be shared, images and containers are cheap to create and many containers can reuse the same layers.

- Container Runtime: The container runtime is responsible for running containers. Docker initially used lxc (Linux Containers) as the runtime, but now uses runc, which is an implementation of the Open Container Initiative (OCI) runtime specification.

- Networking: Docker provides multiple networking options, such as bridge networks, host networks, and overlay networks, to facilitate communication between containers and other network resources.
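The layering idea behind union filesystems is easier to see in code. The following is a toy JavaScript model, not how Docker's storage drivers are actually implemented: each layer is a plain object mapping paths to contents, later layers win over earlier ones, and a null value stands in for a deleted file. The function mergeLayers and all the file names are invented for illustration.

```javascript
// Toy model of a union filesystem: merge layers bottom-up into one view.
// A null value marks a file as deleted in that layer.
function mergeLayers(layers) {
  const view = new Map();
  for (const layer of layers) { // lowest layer first
    for (const [path, contents] of Object.entries(layer)) {
      if (contents === null) {
        view.delete(path);
      } else {
        view.set(path, contents);
      }
    }
  }
  return Object.fromEntries(view);
}

// Two read-only image layers, shared by every container.
const baseLayer = { "/etc/os-release": "linux", "/tmp/cache": "stale" };
const appLayer = { "/app/app.js": "console.log('hi')", "/tmp/cache": null };

// Each container adds only its own thin writable layer.
const writableA = { "/app/log.txt": "written by container A" };
const writableB = {};

const containerA = mergeLayers([baseLayer, appLayer, writableA]);
const containerB = mergeLayers([baseLayer, appLayer, writableB]);

console.log(Object.keys(containerA).sort());
console.log(Object.keys(containerB).sort());
```

Both containers see the application file and the OS file from the shared layers, neither sees /tmp/cache because the app layer deleted it, and only container A sees its own log file.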

What is a Dockerfile?

A Dockerfile is a text document containing the instructions Docker follows to build an image. It defines what the image will contain and how it will be configured. Think of it as a recipe for your container. Instructions run in order, from top to bottom; those that change the filesystem, such as RUN and COPY, each add a layer, and the layers are stacked to form the final image.
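To see the "ordered list of instructions" idea in action, here is a small JavaScript sketch that reads a Dockerfile and lists its steps in build order. It is a simplified reader written for this article, not Docker's own parser: it skips comments and blank lines and does not handle line continuations. The function name parseDockerfile is an invention for the example.

```javascript
// Minimal Dockerfile reader: one step per non-empty, non-comment line.
// Assumption: no line continuations (\) or heredocs in the input.
function parseDockerfile(text) {
  return text
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => line !== "" && !line.startsWith("#"))
    .map((line) => {
      const [keyword, ...rest] = line.split(/\s+/);
      return { instruction: keyword.toUpperCase(), args: rest.join(" ") };
    });
}

// The Dockerfile from the first application in this guide.
const dockerfile = `
# Use an official Node.js runtime as a parent image
FROM node:latest
WORKDIR /usr/src/app
COPY app.js .
CMD [ "node", "app.js" ]
`;

const steps = parseDockerfile(dockerfile);
steps.forEach((step, index) => {
  console.log(`Step ${index + 1}: ${step.instruction} ${step.args}`);
});
```

The output lists four steps starting with FROM and ending with CMD, which is the order in which docker build works through the file.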

Here's a brief overview of the process:

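- Write a Dockerfile:

      FROM node:14
      WORKDIR /app
      COPY . .
      RUN npm install
      CMD ["node", "app.js"]

- Build an Image:

      docker build -t my-node-app .

- Run a Container:

      docker run -d -p 3000:3000 my-node-app

Here, -d runs the container in the background, and -p 3000:3000 publishes port 3000 of the container on port 3000 of your machine.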
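Publishing port 3000 only makes sense if the application inside the container listens on it, which the earlier one-line app.js does not. As a sketch, an app.js for my-node-app could look like the following. It is a hypothetical example, not the only way to write it: it uses only Node's built-in http module, the PORT environment variable is an assumption of this example, and the RUN npm install step in the Dockerfile additionally expects a package.json next to it.

```javascript
// app.js: hypothetical web server for the my-node-app image.
const http = require("http");

// Container-side port; matches the right-hand side of -p 3000:3000.
// Reading PORT from the environment is an assumption of this sketch.
const PORT = Number(process.env.PORT) || 3000;

// Answer every request with the same plain-text message.
function handleRequest(req, res) {
  res.writeHead(200, { "Content-Type": "text/plain" });
  res.end("Docker is magic!\n");
}

const server = http.createServer(handleRequest);

// With no host argument, Node listens on all interfaces, which is what
// lets traffic arriving through the published port reach the server.
server.listen(PORT, () => {
  console.log(`Listening on port ${PORT}`);
});
```

With the container running, opening http://localhost:3000 on your machine should return the message, and docker logs should show the "Listening" line.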

By understanding these components and processes, you can better leverage Docker to streamline your development and deployment workflows.

Dockerfile Essentials: Build Your Containers Like a Pro

Here’s a cheat sheet for common Dockerfile instructions to make your life easier:

- FROM: Specifies the base image for your Docker container.

      FROM node:14

- WORKDIR: Sets the working directory for subsequent commands.

      WORKDIR /app

- COPY: Copies files from your host into the container.

      COPY . .

- RUN: Executes commands in the container during image build.

      RUN npm install

- CMD: Defines the default command to run when the container starts. Arguments passed to docker run after the image name replace it.

      CMD [ "node", "index.js" ]

- EXPOSE: Documents the port that the container will listen on. It does not publish the port by itself; use -p with docker run for that.

      EXPOSE 3000

- ENTRYPOINT: Sets the executable that always runs when the container starts. It does not replace CMD: when both are present in exec form, CMD supplies the default arguments to ENTRYPOINT.

      ENTRYPOINT [ "node", "index.js" ]

- VOLUME: Creates a mount point with the specified path and marks it as holding externally mounted volumes from the native host or other containers.

      VOLUME [ "/data" ]
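CMD and ENTRYPOINT are the two instructions people mix up most, so here is a small JavaScript model of how Docker combines them when both use the exec (JSON array) form. It is an illustration of the rule, not Docker's code: arguments given to docker run after the image name replace CMD, and whatever remains is appended to ENTRYPOINT. The function resolveCommand and the file other.js are invented for the example.

```javascript
// Model of the command a container ends up running (exec form only).
function resolveCommand({ entrypoint = [], cmd = [] }, runArgs = []) {
  // Arguments after the image name in `docker run` replace CMD.
  const args = runArgs.length > 0 ? runArgs : cmd;
  // ENTRYPOINT, if present, always comes first.
  return [...entrypoint, ...args];
}

const cmdOnly = { cmd: ["node", "index.js"] };
const withEntrypoint = { entrypoint: ["node"], cmd: ["index.js"] };

// docker run image
console.log(resolveCommand(cmdOnly).join(" "));
// docker run image node --version
console.log(resolveCommand(cmdOnly, ["node", "--version"]).join(" "));
// docker run image
console.log(resolveCommand(withEntrypoint).join(" "));
// docker run image other.js
console.log(resolveCommand(withEntrypoint, ["other.js"]).join(" "));
```

The practical difference shows in the last two calls: with an ENTRYPOINT of node, extra arguments change which script runs but node itself stays in place, whereas with CMD alone the whole command is swapped out.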

Useful Docker Commands

Here are some essential Docker commands to keep in your toolkit:

- Check Docker version:

      $ docker --version

- List images:

      $ docker image ls

- Delete a specific image:

      $ docker image rm <image_name>

- Delete all images:

      $ docker image rm $(docker images -a -q)

- List running containers:

      $ docker ps

- List all containers:

      $ docker ps -a

- Run a container:

      $ docker run <options> <image_name>

- Stop a specific container:

      $ docker stop <container_name>

- Stop all running containers:

      $ docker stop $(docker ps -q)

- Delete a specific container:

      $ docker rm <container_name>

- Delete all containers:

      $ docker rm $(docker ps -a -q)

- Display container logs:

      $ docker logs <container_name>

Advanced Commands:

- List Docker volumes:

      $ docker volume ls

- Create a Docker network:

      $ docker network create <network_name>

- Connect a container to a network:

      $ docker network connect <network_name> <container_id>

- Execute a command in a running container:

      $ docker exec -it <container_id> <command>

- Inspect Docker objects:

      $ docker inspect <object_name>

What’s Next?

In the next part of this guide, we’ll dive into Docker Compose and learn how to manage multi-container applications effortlessly.

Conclusion

Congratulations on taking your first steps with Docker! You’ve created your first Docker application and learned how Docker can simplify your development and deployment process. Keep experimenting and exploring Docker’s capabilities, and you’ll be a Docker pro in no time. If you have questions or feedback, feel free to ask. Happy Dockering! 🐳

Additional Learning Points

Docker Concepts

Docker enables developers and sysadmins to build, share, and run applications with containers. Containerization simplifies deploying applications in any environment.

Docker Hub

Docker Hub is the official cloud-based registry for Docker images, allowing you to share and distribute Docker images easily.

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure application services, networks, and volumes, allowing you to start all services with a single command.

Docker Swarm

Docker Swarm is Docker’s native clustering and orchestration tool, providing high availability, scaling, and load balancing for containers.